Compilation Process

1. Text Selection

There are two main criteria for text selection: (1) the popularity and distribution of the grammar books and (2) variety in function, audience, and text type. The popularity and distribution of a grammar are determined from:

- bibliographic listings of grammar books (e.g. in Michael 1987, Görlach 1998)

- numbers of editions

- book catalogues, advertisements, etc.

- contemporaries' comments, e.g. in literary genres, private letters

- curricula of schools, colleges, etc.

All corpus texts pass through the compilation steps described below.

2. Text Acquisition

Texts are acquired in two main ways: we either draw on digital archives (e.g. EEBO, ECCO) or digitise texts from original printed material.

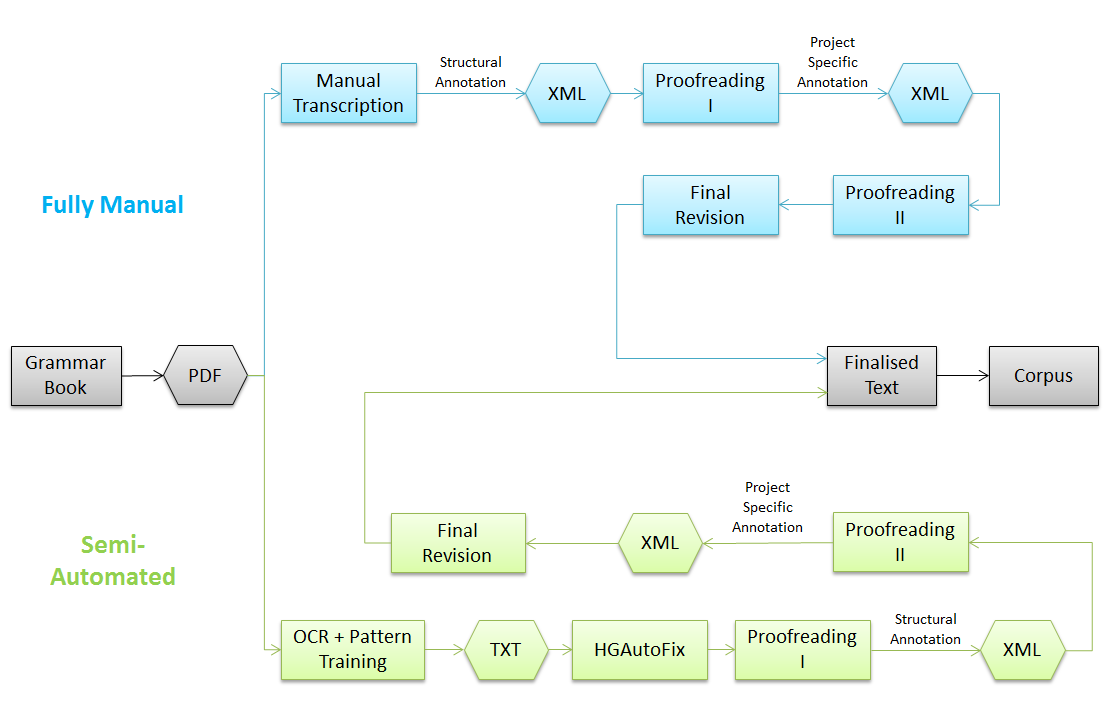

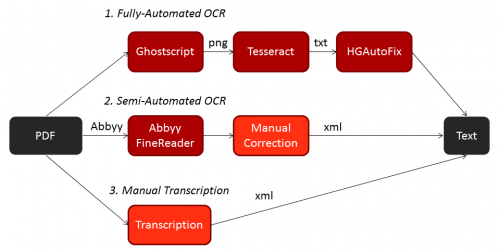

3. Digitisation

In fully automated OCR, correction is carried out automatically via HGAutoFix. In types two and three (semi-automated OCR and manual transcription), correction is done manually by research assistants.

Fully automated OCR should be considered only when resources are highly limited. Nevertheless, we were able to obtain significant results from corrupted OCR data, as was shown at ICEHL 19. For in-depth analyses of the data, semi-automated OCR or manual transcription is advisable.
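As a rough illustration of what automated post-correction of OCR output can involve, the sketch below applies a small table of substitution rules to a piece of text. It does not reproduce HGAutoFix, whose rules are not described here; the rule table `RULES` and the function `apply_rules` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical substitution rules for frequent OCR misreadings.
# They are illustrative only and are NOT taken from HGAutoFix.
RULES = [
    (re.compile(r"\btbe\b"), "the"),     # 'h' misread as 'b'
    (re.compile(r"\bvvith\b"), "with"),  # 'w' misread as 'vv'
]


def apply_rules(text: str) -> str:
    """Apply every substitution rule in order and return the corrected text."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text


corrected = apply_rules("tbe noun agrees vvith tbe verb")
```

Rules of this kind only catch errors that have been anticipated in advance, which is one reason why manual correction remains advisable for in-depth analyses.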

4. Proofreading

Every text is proofread three times to ensure quality. The proofreading is done by different people in order to minimise risks of data corruption and to establish intersubjectivity.

Normalisation (e.g. standardising the format) follows a set of normalisation rules.
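The project's normalisation rules are not listed here, so the following is only a minimal sketch of what format normalisation might look like. It assumes three rules (Unicode NFC normalisation, uniform line endings, and collapsed whitespace); the function name `normalise` and the choice of rules are assumptions, not the project's actual rule set.

```python
import re
import unicodedata


def normalise(text: str) -> str:
    """Apply a few assumed format-normalisation rules to a transcription."""
    # Assumed rule 1: use composed Unicode characters (NFC).
    text = unicodedata.normalize("NFC", text)
    # Assumed rule 2: use "\n" as the only line ending.
    text = text.replace("\r\n", "\n").replace("\r", "\n")
    # Assumed rule 3: collapse runs of spaces and tabs into one space.
    text = re.sub(r"[ \t]+", " ", text)
    # Assumed rule 3 (continued): strip leading and trailing whitespace per line.
    return "\n".join(line.strip() for line in text.split("\n"))


sample = normalise("A  noun\t is\r\na name. ")
```

Keeping each rule as a separate, commented step makes it easier to document the rule set alongside the corpus and to apply it identically to every text.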

5. Markup I - Structure

In Markup I, structural elements of the text are annotated in XML. This includes, for example, headings, paragraphs, and tables.
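To make the idea of structural markup concrete, the sketch below builds a small XML fragment with Python's standard `xml.etree.ElementTree` module. The element names `div`, `head`, and `p` are assumptions for illustration (the project's actual schema is not specified here), and the sample heading and sentence are invented.

```python
import xml.etree.ElementTree as ET


def build_section(heading: str, paragraphs: list[str]) -> ET.Element:
    """Wrap a heading and its paragraphs in hypothetical structural elements."""
    section = ET.Element("div")          # assumed container element
    head = ET.SubElement(section, "head")  # assumed heading element
    head.text = heading
    for para in paragraphs:
        p = ET.SubElement(section, "p")  # assumed paragraph element
        p.text = para
    return section


# Invented sample content, not taken from a corpus text.
section = build_section("Of Nouns", ["A noun is the name of a thing."])
xml_string = ET.tostring(section, encoding="unicode")
```

Here `xml_string` holds the serialised fragment, with the heading and the paragraph each enclosed in their own element inside the container.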

Once Markup I is complete, the grammar will be made available on the website to give interested parties early access to the data.

6. Markup II - Project-Specific Annotation

In Markup II, project-specific annotation is added. This includes, for instance, the markup of authors' references to other grammars.
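The annotation of references to other grammars could, for example, take the form of an inline element that points to the grammar being cited. The sketch below wraps the first mention of a grammarian in such an element. The element name `ref`, the attribute `target`, the identifier `#lowth1762`, and the function `mark_reference` are all hypothetical, and the sample sentence is invented.

```python
import xml.etree.ElementTree as ET


def mark_reference(paragraph_text: str, mention: str, target_id: str) -> ET.Element:
    """Return a <p> element in which the first occurrence of `mention`
    is wrapped in a hypothetical <ref> element pointing to `target_id`."""
    before, found, after = paragraph_text.partition(mention)
    p = ET.Element("p")
    if not found:
        # No mention in this paragraph: keep the text unchanged.
        p.text = paragraph_text
        return p
    p.text = before
    ref = ET.SubElement(p, "ref", {"target": target_id})
    ref.text = mention
    ref.tail = after  # text following the reference inside the paragraph
    return p


# Invented sample sentence and hypothetical identifier.
p = mark_reference(
    "As Dr. Lowth observes, the article is often omitted.",
    "Dr. Lowth",
    "#lowth1762",
)
annotated = ET.tostring(p, encoding="unicode")
```

Storing the cited grammar as an attribute value rather than as free text makes it possible to count and compare references across the corpus.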

7. Final Revision

The final revision is the last round of proofreading and ensures the quality of both data and markup. It will be carried out by the editors themselves, not by student assistants.

8. Finalisation

Once a grammar has been 'finalised', it will be included in the next major release of the corpus. These releases will be published by the Competence Centre for Research Data at Heidelberg University.